Increased use of algorithms in the justice system could lead to ‘dehumanised justice’ and threaten human rights, according to the Law Society. Following a year-long investigation into the technology, a report by the solicitors’ professional body concluded that there was a lack of explicit standards and transparency about the use of algorithmic systems in criminal justice and called for greater oversight.

‘An uncritical reliance on technology could lead to wrong decisions that threaten human rights and undermine public trust in the justice system,’ the group said. At present, examples of algorithmic systems being used by police forces nationwide include facial recognition systems, DNA profiling, predictive crime mapping and hot-spotting, and mobile phone data extraction.

The Law Society’s technology and law policy commission published its report earlier this week, drawing on four public sessions and interviews with more than 75 experts. The increase in the use of such technology has been attributed to resourcing pressures.

The group argued that the criminal justice system was faced with ‘an avalanche of problems, including a growing shortage of duty solicitors, increasing court closures, barriers to accessing legal aid, and crucial evidence not being available until the last minute’. ‘Algorithmic systems might help bring efficiencies to the system through automation of rote tasks, or, more controversially, might take more value-laden decision support roles,’ it said.

A study based on freedom of information requests in the United Kingdom in 2016 asked all police forces whether they used algorithms or similar approaches, and 14% of the 43 forces confirmed they did. The civil liberties group Liberty undertook a similar exercise last year, making 90 freedom of information requests to police forces, of which 14 came back affirmative.

There is a long history of ‘predictive hotspot policing’, which allows for statistical forecasts about where future crime may take place and which, according to the report, entered the public consciousness through the deployment of the US PredPol software. Kent Police used PredPol for five years until early 2018, and are currently building their own system. In the UK, ProMap, built by researchers at the Jill Dando Institute of Crime Science, University College London, was deployed in the East Midlands in 2005/6. The report listed a number of collaborations between UK police forces and universities. For example, London Metropolitan Police partnered with University College London on a £1.4m Research Councils UK-funded project concerning geospatial predictive modelling for policing between 2012 and 2016.

According to the Law Society, the main challenge of algorithm use is the potential for discriminatory decisions to be made based on biased or oversimplified data. The report highlights that data, in general, cannot capture the full complexity of an issue. ‘Relying on algorithmic systems might result in some decisions being made on a shallow view of evidence and without a deep, contextual consideration of the facts,’ the report said.

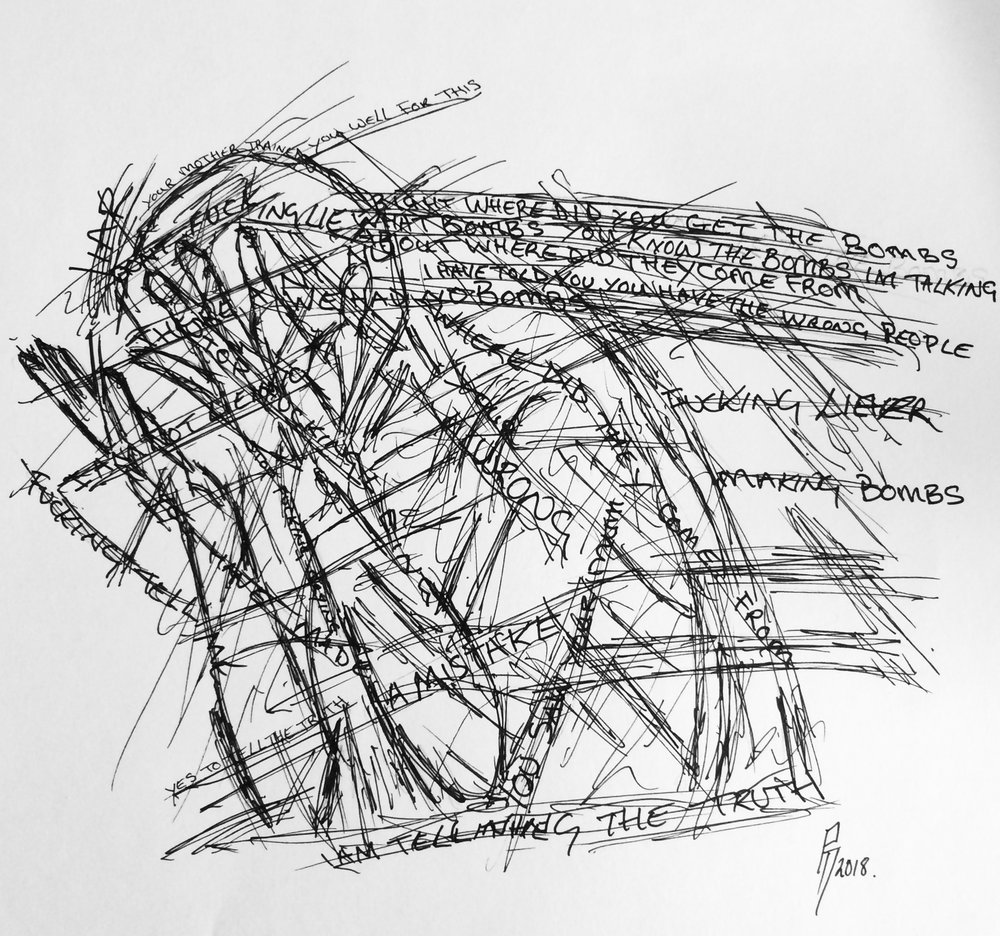

The element of human interpretability is removed from this purely algorithmic decision-making process. According to the report, the technology makes predictions and decisions based on the data fed into it and could exacerbate existing discriminatory policing policies in certain neighbourhoods.

‘If, as is commonly known, the justice system does under-serve certain populations or over-police others, these biases will be reflected in the data, meaning it will be a biased measurement of the phenomena of interest, such as criminal activity.’

The Law Society’s technology and law policy commission

The report highlights risks that stem from what are ‘effectively policy decisions baked into algorithmic systems being made invisibly and unaccountably by contractors and vendors’. The Law Society group argues that ‘political design choices should never be outsourced’, since checks and balances on the technology cannot be properly maintained if they are.

The report also asserts that, with the right framework, algorithmic systems can benefit the justice system by providing efficiency, efficacy, auditability and consistency. Its recommendations include developing oversight mechanisms, including the creation of a National Register of Algorithmic Systems to make it simpler to analyse what is already in use; declaring the lawful basis of algorithmic systems in the criminal justice system in advance of their use, which would inevitably require clarifying and changing legislation pertaining to data protection, freedom of information, procurement and equality duties; and educating public bodies on the appropriate and responsible use of this technology.
